How should researchers applying machine learning to quantitative finance address the field’s data limitations, which exacerbate data snooping bias? In their October 2018 paper entitled “A Backtesting Protocol in the Era of Machine Learning”, Robert Arnott, Campbell Harvey and Harry Markowitz take a step back and re-examine financial markets research methods, with focus on suppressing backtest overfitting of investment strategies. They introduce a research protocol recognizing that self-deception is easy. Their goal is that the protocol offers the best way to match or beat expectations in live trading. Based on logic and their collective experience, they conclude that:

- 55 years of broad, high-quality firm/stock data are available. This sample is tiny for most machine learning applications, and impossibly small for advanced methods such as deep learning.

- In this environment, with intensive backtesting, false strategies can survive simple cross-validation (such as a single validation test).

- When data are so limited, economic foundations are critical.

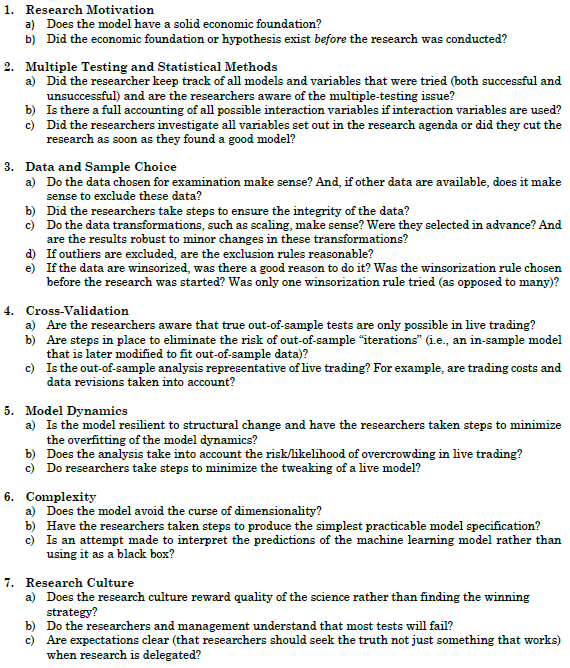

- The proposed research protocol has seven elements:

- Carefully guide machine learning tests by a prior reasonable hypothesis. Any hint that review of data preceded hypothesis development is a red flag.

- Keep track of everything tried, including combinations of variables, as a source of data snooping bias. Understand the secondary bias derived from the entire community of researchers working on similar backtests.

- Specify and clean the training sample in advance, before examining the data. Acknowledge that transformations such as winsorizing/exclusion of outliers and volatility scaling amount to introducing additional variables.

- Understand that researcher experience contaminates any hold-out sample during which the researcher lived, such that true historical out-of-sample data hardly exist. The only truly fresh samples are live trading data. Iterated out-of-sample testing of model variations is overfitting, not out-of-sample testing. All research must account for contemporaneous trading frictions and implementation barriers.

- Understand that studying and refining a model generally increases the mismatch between expectations and true performance. While modifications are a natural response to failure, they generally increase overfitting and may worsen live trading performance.

- Investment managers should not accept machine learning as a black box. The greater the complexity of a model and the greater the reliance on non-intuitive relationships, the greater the mismatch between backtest and live results.

- Organizations/customers should reward researchers for good science, not good backtest results, with the expectation that most tests will fail. Incentivizing good backtests encourages data snooping.

The following protocol summary, extracted from the paper, alternatively summarizes the seven elements.

In summary, the proposed financial markets research protocol balances dual objectives of suppressing false strategies while discovering good ones.

Cautions regarding conclusions include:

- Conventional incentives work against adoption of the proposed research protocol.

- Research on contemporaneous trading frictions and implementation barriers (such as shorting constraints) is thin. These costs/barriers vary considerably across types of investors.

- Costs should also include those for initial research and for recurring research/analyses to support implementation.